The team redefining how skills are measured.

Kian Katanforoosh

Founder & CEO

Kian leads Workera’s scientific vision, shaping how advances in AI can deepen our understanding of human capability at scale. His focus is pushing the frontier—then translating those advances into systems that are interpretable, accountable, and fit for consequential decisions.

As founder and CEO, he bridges frontier AI research with the realities of building products that must earn trust in the real world—ensuring innovation is paired with clarity, responsibility, and measurable impact.

AI leadership at global scale

Co-creator of the Deep Learning Specialization with Andrew Ng

Adjunct professor at Stanford University

Recognized for translating AI research into real-world systems

World Economic Forum Technology Pioneer (2025)

Walter J. Gores Award for Excellence in Teaching (Stanford)

Forbes 30 Under 30 (Education)

Dr. Taylor Sullivan

VP of Product and Assessment Science

Taylor leads the end-to-end design of Workera’s assessment system, translating modern assessment science into scalable, AI-native products. She ensures skills are defined, measured, and interpreted in ways enterprises can trust across high-stakes talent decisions.

15+ years designing high-stakes assessment systems

Former product and assessment leader at Codility and HumRRO

Expert in evidence-centered design and AI-enabled measurement

Dr. Marian Pitel-Wali

Senior Assessment Scientist

Marian leads the scientific rigor behind Workera’s AI-driven assessments, ensuring innovation scales without sacrificing validity, fairness, or transparency. She governs how assessments are built, scored, monitored, and reviewed as AI plays a larger role in skill evaluation.

10+ years across assessment science, software, and AI

Expert in human-in-the-loop measurement and review systems

Co-founder of an assessment technology company deployed at scale

Andrew Ng

Chairman of the Board

Andrew provides strategic guidance to Workera on the responsible use of AI in education and workforce development. A globally recognized AI leader, he brings deep technical expertise in translating research into real-world systems at scale.

Founder of DeepLearning.AI and co-founder of Coursera

Former Chief Scientist at Baidu and founding lead of Google Brain

Author of 200+ research papers; named to the TIME100 AI list

AI should strengthen measurement, not shortcut it.

Better evidence

AI unlocks richer, more direct evidence of skill through realistic scenarios, interactive tasks, and multiple response modalities that reflect how work is actually done, rather than how well facts can be recalled or guessed.

Rigor at scale

We embed assessment science into the product so rigor scales with the system. Clear constructs, evidence-centered design, and governed scoring prevent AI from introducing noise as measurement expands across roles and use cases.

AI at the pace of the real world

Because skills and roles evolve quickly, measurement must evolve with them. Continuous monitoring and scientist-led iteration keep signals accurate and defensible as work changes.

Built for innovation with evidence.

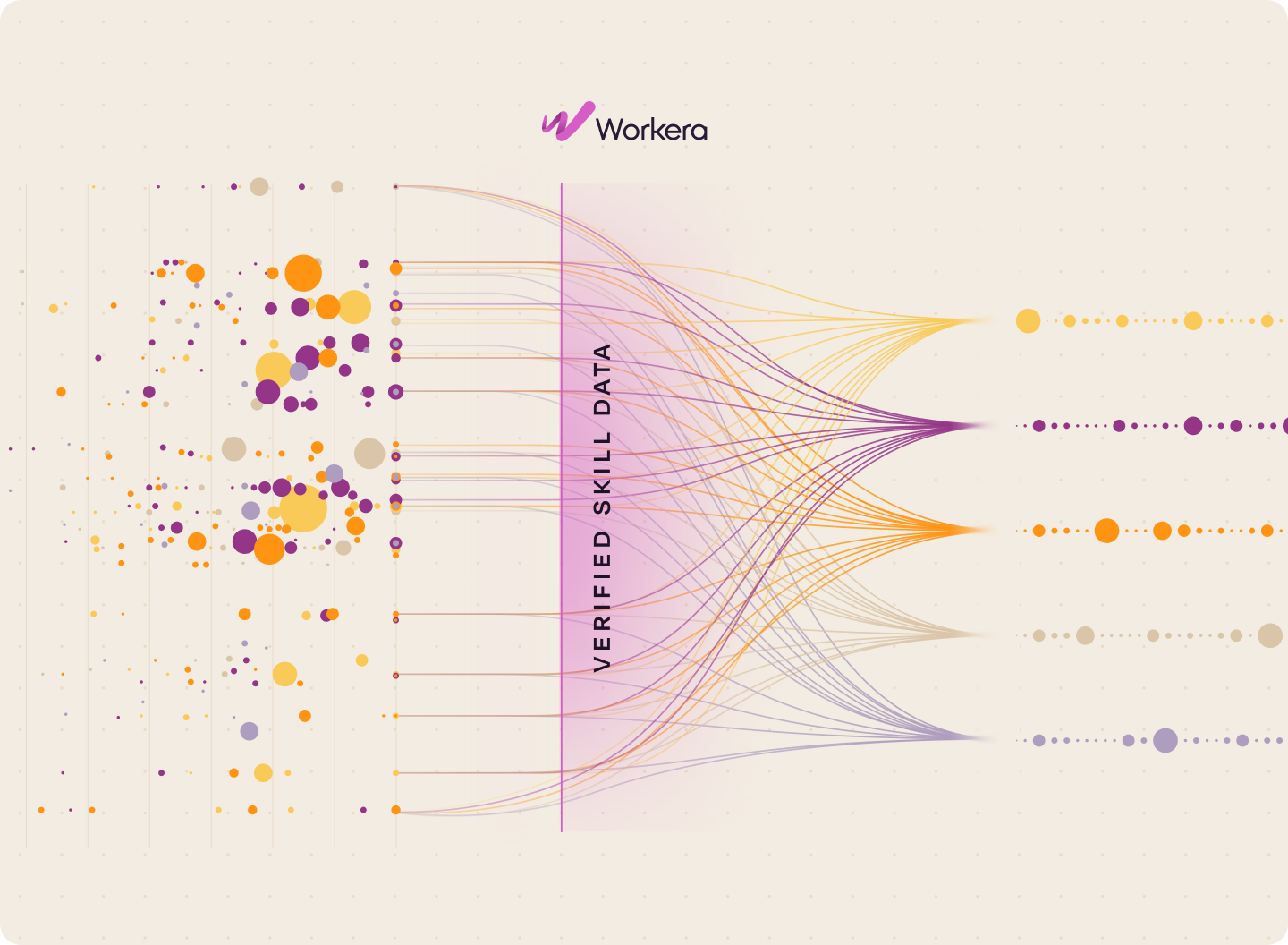

Workera’s skill verification system follows an end-to-end lifecycle designed for accuracy, consistency, and durability at enterprise scale.

Define skill frameworks

Clear constructs and proficiency levels grounded in expert input and real-world relevance — so we measure the right skills, not convenient proxies.

Design real-world tasks

Scenario-based work elicits observable evidence of applied skill — so people demonstrate what they can actually do in context.

Generate & validate

Tasks are refined using evidence-centered design, accessibility review, and bias evaluation — so assessments are defensible before they ever scale.

Expert-grounded scoring

Responses are scored using task-appropriate methods—deterministic where answers are fixed, expert-aligned where judgment is required—ensuring consistent, explainable, and auditable results.

Monitor & evolve

Continuous reviews track reliability, system performance, and construct stability as the system scales, so accuracy holds as roles, data, and work change.

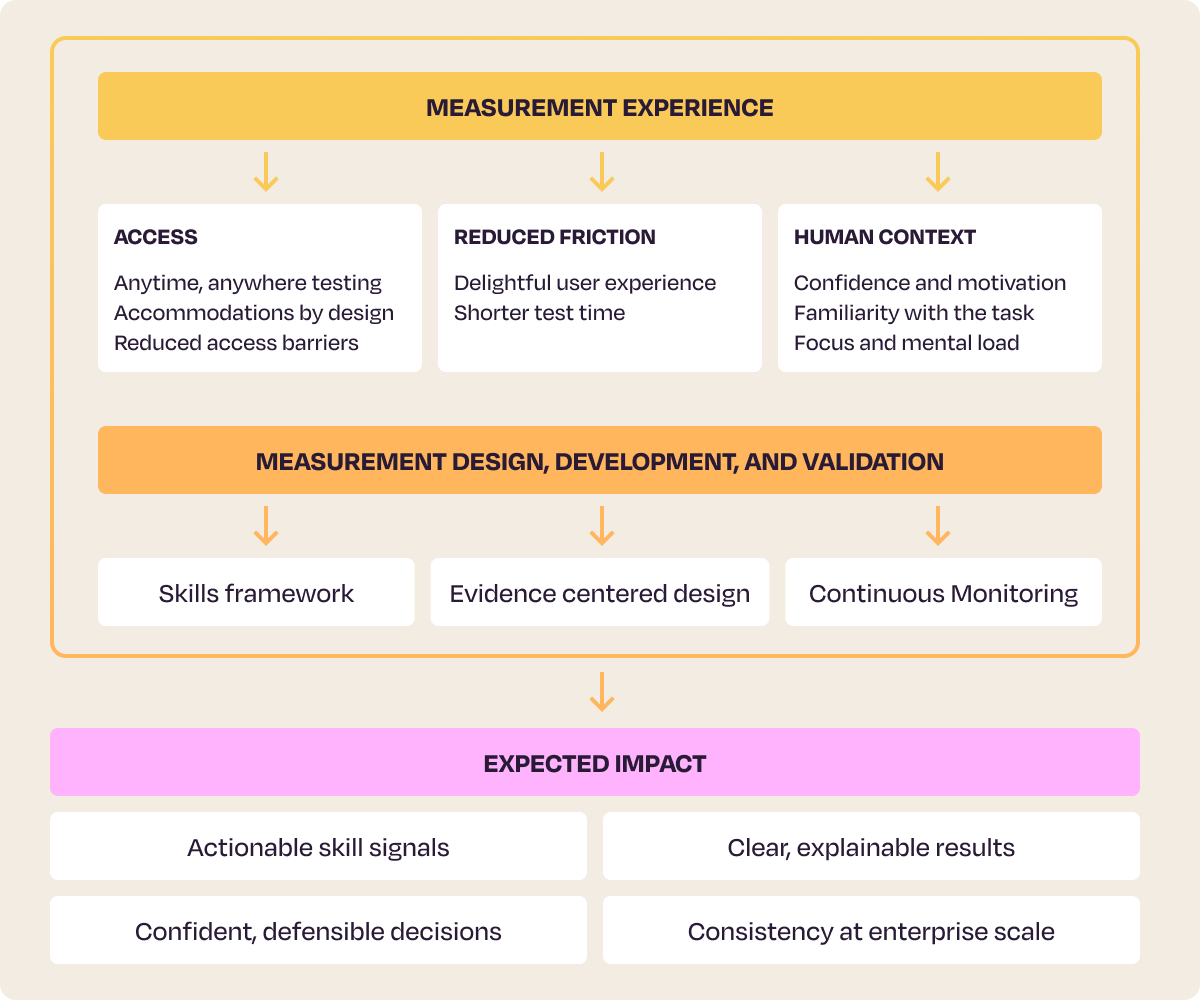

Experience design is a measurement decision.

Clear language and guided reasoning help people show what they know without guesswork.

Multiple response options and accessibility support make assessments usable across different abilities and contexts.

Immersive assessments

Built-in consistency checks keep scoring stable and equitable over time, with a clear appeal path for human review when appropriate.

Consistent AI scoring with human review ensures stable, reviewable outcomes at scale.

Responsible AI built to inform high-stakes decisions.

Transparent scoring

Deterministic prompts keep reasoning traceable and suppress unsupported model output.

Human oversight

Review pathways and contestability ensure humans remain involved in consequential decisions.

Continuous monitoring

Drift checks, stability reviews, and scoring audits detect changes before they impact results.

Strong governance

Model and prompt versioning, traceability logs, and strict access controls keep the scoring system auditable.

Verified skills that drive real decisions.

Clear, interpretable insights

Every skill result comes with transparent evidence—showing what was measured, how it was scored, and what it means for readiness.

Targeted development

Learners receive personalized, role-relevant recommendations grounded in their verified skill gaps and strengths.

Mobility and career pathways

Skill signals reveal who is ready for new roles, where reskilling is needed, and how teams can grow from within.

Team and capability intelligence

Leaders see consistent, comparable skill data across roles and functions—supporting talent planning and project staffing.

Organizational visibility

Aggregated insights help teams understand workforce strengths, identify capability gaps, and guide investment in learning and transformation efforts.

Progress you can measure

Longitudinal skill signals show growth over time—revealing improvement, proficiency gains, and where support is still needed.

FAQ

Workera defines skill measurement as the process of capturing demonstrated capability through observable behavior, not through proxies such as self-report, job titles, or learning activity.

Measurement focuses on how individuals reason, decide, and act in realistic, job-relevant scenarios. Each interaction produces evidence that contributes to a clearer, more interpretable view of skill proficiency and confidence. This evidence-based approach allows skills to be measured in a way that supports meaningful interpretation and real-world decision-making.

In Workera’s measurement system, validity refers to whether skill signals support the decisions they are used to make.

Validity is not a property of a test in isolation. It is evaluated by examining how individuals respond to tasks, how signals behave over time, how closely measurement aligns to real work, and the consequences of using those signals in practice. Workera follows a modern, unitary view of validity, integrating multiple sources of evidence to ensure skill interpretations are appropriate, trustworthy, and defensible for their intended use.

Proxies such as course completion, job history, or self-assessment provide indirect signals about skill exposure, not proof of capability.

Workera focuses on evidence because enterprise decisions require confidence that skills are actually present and applicable. Direct evidence reduces noise, improves interpretability, and enables consistent comparison across people, roles, and time. This is especially important as the stakes of workforce decisions increase.

At Workera, AI is used to strengthen measurement, not to shortcut it.

AI supports the generation of job-relevant scenarios, the orchestration of conversational measurement moments, and the consistent evaluation of skill signals at scale. Crucially, AI operates within measurement-science guardrails: signals remain interpretable, reviewable, and grounded in evidence. This allows Workera to scale skill verification while maintaining trust and defensibility.

Skills data increasingly informs decisions about readiness, investment, and execution. When measurement lacks rigor, those decisions become risky.

Rigorous, evidence-based measurement ensures that skill signals remain meaningful as roles evolve, organizations scale, and expectations change. This is what allows skills data to function as a reliable system of record, supporting decisions that must be explained, audited, and defended over time.